DSPM for AI - Part 3: Monitoring & Governance

Publishing sensitivity labels, deploying Copilot-aware DLP, and running Data Risk Assessments give you a strong foundation. But security isn’t a one-time setup. To keep Copilot secure, you need continuous monitoring and governance processes.

DSPM for AI provides the tools to do this: Activity Explorer, Apps & Agents, and recurring assessments. Together, they help you spot issues early, monitor how sensitive data interacts with Copilot, and ensure governance becomes part of daily operations.

Scenario: Prompt monitoring with Activity Explorer

A finance analyst repeatedly uses Copilot to query documents labeled Highly Confidential.

- The risk: Even though the analyst has access, repeated usage of financial data in AI prompts could indicate risky behavior or misuse.

- How DSPM helps: Activity Explorer shows which prompts and responses contained sensitive data, allowing security or compliance teams to investigate patterns and take action.

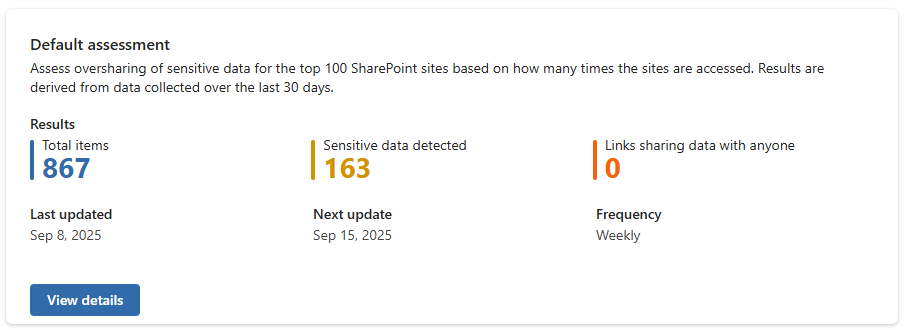

Ongoing monitoring with Data Risk Assessments

- Data Risk Assessments are updated weekly and show trends in oversharing, labeling, and site-level risks.

- Export results to track changes over time and escalate findings to data owners.

- Treat assessments as recurring health checks, not one-time reports.

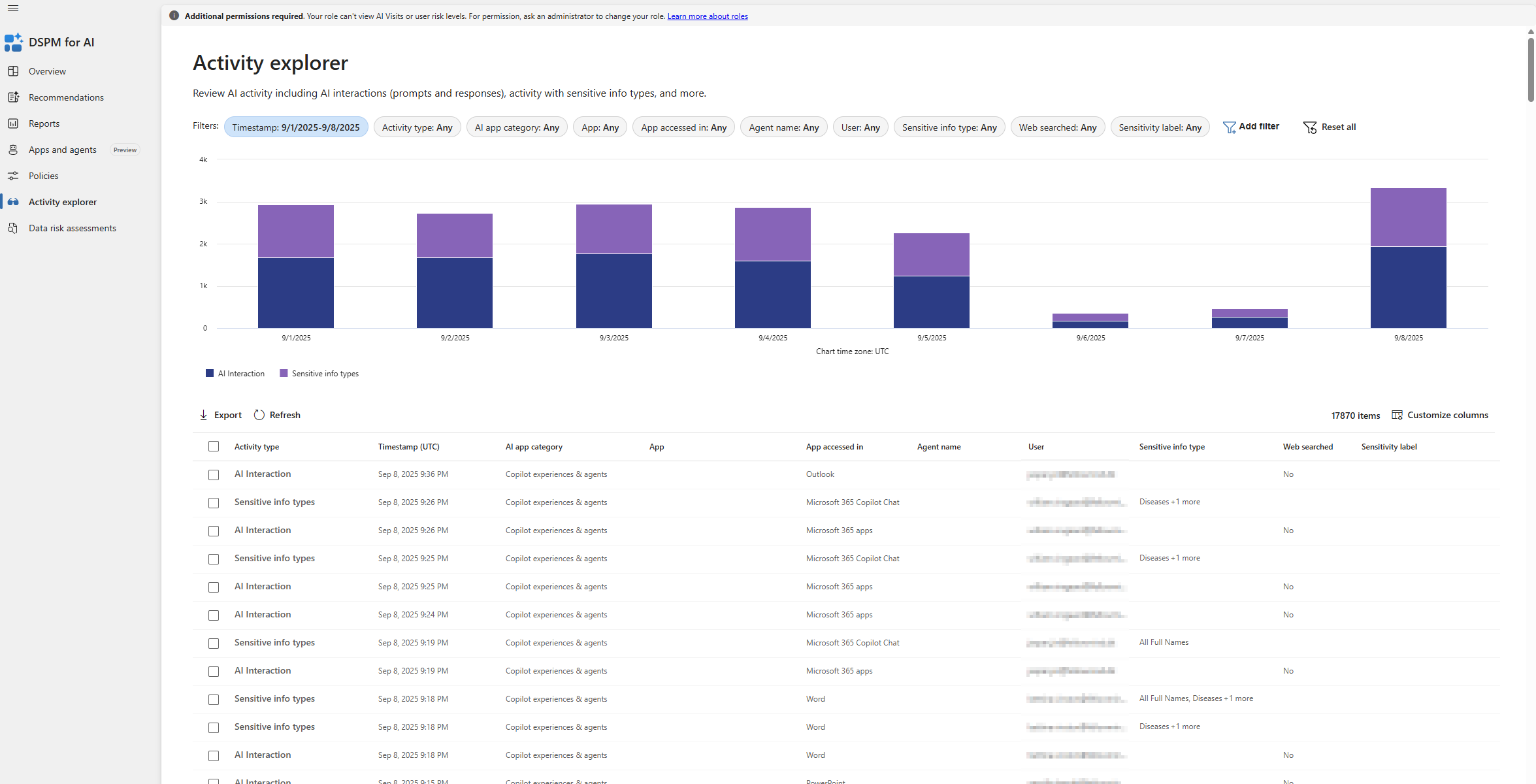
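One way to track exported results over time is to compare two consecutive exports and list the sites where oversharing has grown. The sketch below is a minimal illustration, not a documented export schema: the column names `Site URL` and `Overshared items`, the sample data, and both function names are assumptions, so adjust them to match the columns in your own export.

```python
import csv
import io


def load_site_counts(csv_text, site_col="Site URL", count_col="Overshared items"):
    """Map each site to its overshared-item count from one exported CSV.

    The column names are hypothetical; rename them to match your export.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[site_col]: int(row[count_col]) for row in reader}


def compare_exports(previous, current):
    """Return (site, previous_count, current_count) for sites whose count rose.

    Sites missing from the previous export are treated as starting at 0.
    Results are ordered by the size of the increase, largest first.
    """
    worsened = [
        (site, previous.get(site, 0), count)
        for site, count in current.items()
        if count > previous.get(site, 0)
    ]
    return sorted(worsened, key=lambda row: row[2] - row[1], reverse=True)


# Illustrative sample data standing in for two weekly exports.
LAST_WEEK = """Site URL,Overshared items
https://contoso.sharepoint.com/sites/finance,12
https://contoso.sharepoint.com/sites/hr,4
"""

THIS_WEEK = """Site URL,Overshared items
https://contoso.sharepoint.com/sites/finance,19
https://contoso.sharepoint.com/sites/hr,3
https://contoso.sharepoint.com/sites/legal,2
"""

if __name__ == "__main__":
    changes = compare_exports(load_site_counts(LAST_WEEK), load_site_counts(THIS_WEEK))
    for site, before, after in changes:
        print(f"{site}: {before} -> {after}")
```

Sites that appear in this list are natural candidates for escalation to the data owner.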

Monitoring Copilot usage with Activity Explorer

- Activity Explorer tracks Copilot prompts and responses that involve sensitive data.

- This gives visibility into who is using Copilot, what data is involved, and which sensitivity labels are applied.

- Limitation: only 30 days of history are available, regardless of license.

- Use this visibility to validate that DLP rules are working and to detect unusual or risky prompt behavior.

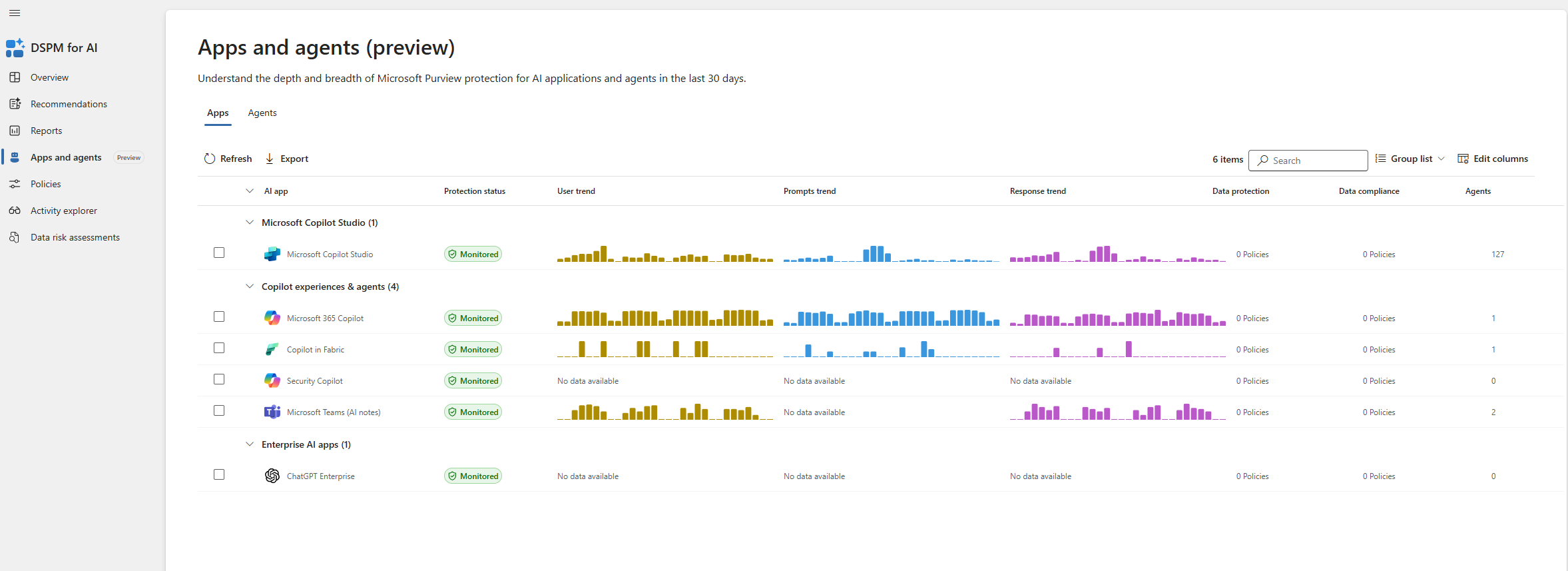

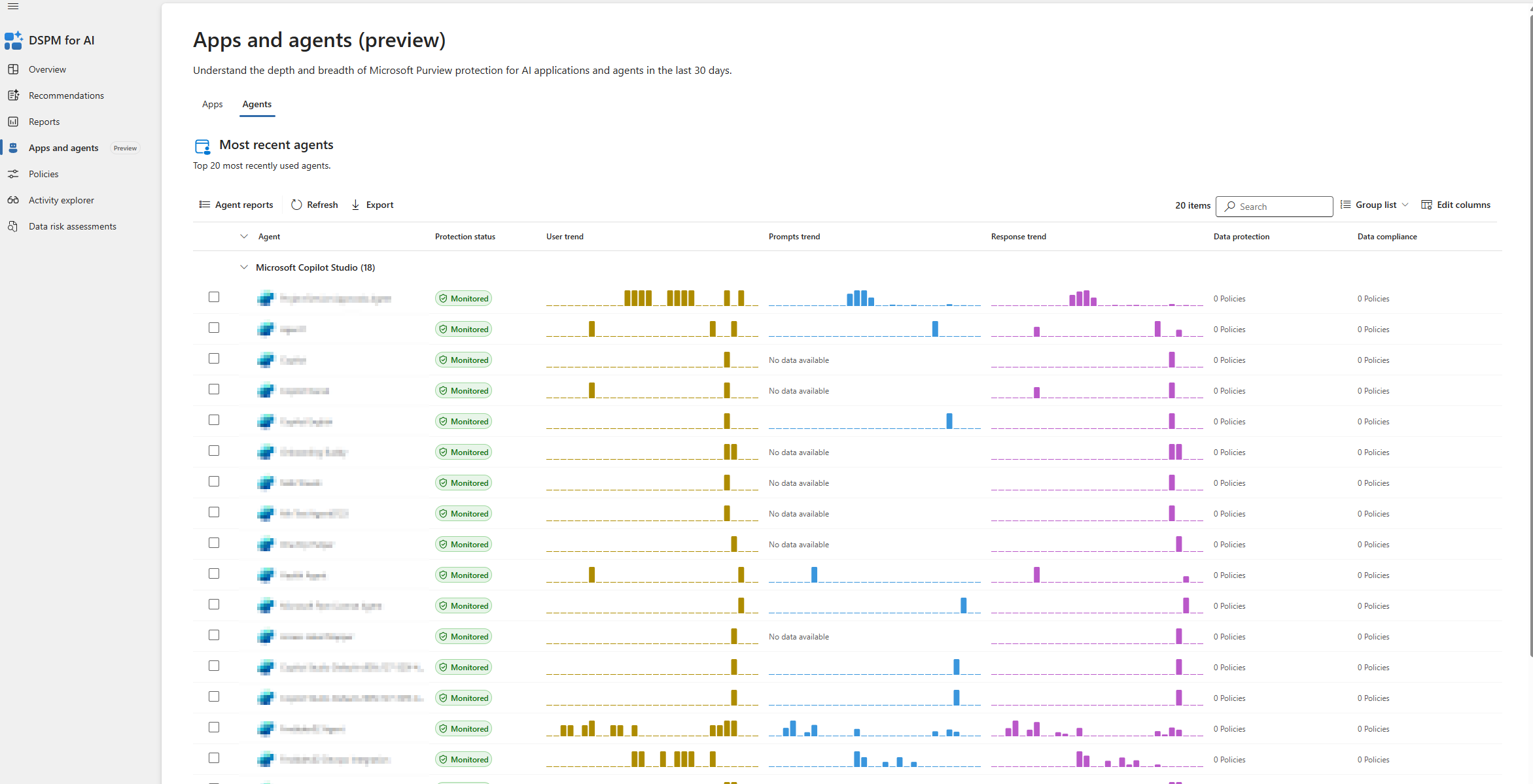
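To make "unusual or risky prompt behavior" concrete, the sketch below counts how often each user's Copilot interactions involved a given sensitivity label and flags users at or above a threshold, much like the finance analyst in the earlier scenario. It assumes you have already exported activity records into a list of dictionaries. The field names `user` and `labels`, the threshold of 3, and the sample events are all hypothetical and not the actual Activity Explorer schema.

```python
from collections import Counter


def flag_repeat_users(events, label="Highly Confidential", threshold=3):
    """Return {user: count} for users with at least `threshold` interactions
    that involved content carrying `label`.

    Each event is assumed to be a dict with a "user" string and a "labels"
    list; both field names are illustrative.
    """
    counts = Counter(
        event["user"] for event in events if label in event.get("labels", [])
    )
    return {user: n for user, n in counts.items() if n >= threshold}


# Illustrative sample records.
EVENTS = [
    {"user": "analyst@contoso.com", "labels": ["Highly Confidential"]},
    {"user": "analyst@contoso.com", "labels": ["Highly Confidential"]},
    {"user": "analyst@contoso.com", "labels": ["Highly Confidential"]},
    {"user": "pm@contoso.com", "labels": ["General"]},
    {"user": "pm@contoso.com", "labels": ["Highly Confidential"]},
]

if __name__ == "__main__":
    for user, n in flag_repeat_users(EVENTS).items():
        print(f"{user}: {n} interactions with Highly Confidential content")
```

A flagged user is a prompt for investigation rather than proof of misuse; the right threshold depends on the role and how the data is normally used.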

Monitoring Apps & Agents

- DSPM for AI also provides visibility into AI apps and agents integrated with Microsoft 365.

- You can see:

  - Which apps/agents are active

  - What data they request access to

  - Whether they are interacting with sensitive or overshared content

- This visibility helps you enforce plugin governance and ensure that only approved AI extensions access tenant data.
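The approval check described above can be sketched as a comparison between an inventory of active agents and an allowlist. This is an illustration under stated assumptions: the agent names, the `touches_sensitive` field, and the function name are hypothetical, and the inventory would have to be assembled from whatever the Apps & Agents view shows in your tenant.

```python
def unapproved_agents(agents, approved):
    """Return names of active agents that are not on the approved list.

    Agents that interact with sensitive content are listed first so they
    can be reviewed first; ties are broken alphabetically.
    """
    pending = [agent for agent in agents if agent["name"] not in approved]
    pending.sort(
        key=lambda agent: (not agent.get("touches_sensitive", False), agent["name"])
    )
    return [agent["name"] for agent in pending]


# Illustrative inventory and allowlist.
AGENTS = [
    {"name": "Expense Helper", "touches_sensitive": False},
    {"name": "Quick Translate", "touches_sensitive": False},
    {"name": "Contract Summarizer", "touches_sensitive": True},
    {"name": "Meeting Notes Bot", "touches_sensitive": True},
]
APPROVED = {"Expense Helper", "Meeting Notes Bot"}

if __name__ == "__main__":
    for name in unapproved_agents(AGENTS, APPROVED):
        print(f"Needs review: {name}")
```

Keeping the allowlist in version control gives you an audit trail of when each app or agent was approved and by whom.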

Building governance processes

Monitoring only works if it’s backed by process. Define:

- Who reviews DSPM insights (SOC, compliance, or data governance teams)

- How often reviews happen (weekly for risk assessments, daily/weekly for Activity Explorer)

- What happens with findings (remediation, escalation, or policy tuning)

- How improvements are tracked (dashboards, KPIs, reporting back to stakeholders)
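A review cadence is easier to keep when it is written down somewhere a script can check. The sketch below records an owner and interval for each review and reports which ones are overdue. The owners, the intervals, and the `overdue_reviews` helper are illustrative assumptions based on the cadence suggested above, not part of DSPM for AI.

```python
from datetime import date, timedelta

# Hypothetical cadence: weekly for risk assessments, daily for Activity Explorer.
REVIEW_CADENCE = {
    "Data Risk Assessments": {"owner": "data governance", "every_days": 7},
    "Activity Explorer": {"owner": "SOC", "every_days": 1},
}


def overdue_reviews(last_reviewed, today):
    """Return (review_name, owner) pairs whose last review is older than the
    configured interval. A review with no recorded date counts as overdue.
    """
    overdue = []
    for name, config in REVIEW_CADENCE.items():
        last = last_reviewed.get(name)
        if last is None or today - last > timedelta(days=config["every_days"]):
            overdue.append((name, config["owner"]))
    return overdue


if __name__ == "__main__":
    last_reviewed = {
        "Data Risk Assessments": date(2025, 1, 10),
        "Activity Explorer": date(2025, 1, 12),
    }
    for name, owner in overdue_reviews(last_reviewed, today=date(2025, 1, 15)):
        print(f"Overdue: {name} (owner: {owner})")
```

The output of a check like this can feed the dashboards or KPIs you use to report back to stakeholders.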

Wrap-up

With monitoring and governance in place, DSPM for AI evolves from deployment into continuous protection. You’re not only deploying policies and finding risks; you’re actively managing them as Copilot adoption grows.

From here, the natural extension is to look beyond Microsoft 365 and address Shadow AI. That’s where Endpoint DLP comes in: stopping sensitive data from leaving managed environments and landing in external AI tools like ChatGPT.