DSPM for AI - Part 1: Deploy Sensitivity Labels, DLP & Retention Policies

Copilot is only as safe as the data it can see. The first step in securing Microsoft 365 Copilot is to make sure your data posture is ready. That starts with sensitivity labels, Copilot-aware DLP policies, and retention settings for Copilot prompt history.

At the time this post was written, the only DLP option for Microsoft 365 Copilot was to restrict processing based on sensitivity labels.

This has since evolved.

You can now configure Data Loss Prevention policies for Copilot prompts based on sensitive information types (SITs). This lets you block Copilot prompts directly on detected sensitive data such as PII, financial data, or custom classifiers.

This means:

- Copilot protection no longer depends exclusively on labels

- SIT-based detection can prevent Copilot prompts from processing sensitive but unlabeled data

- Sensitivity labels remain a strong governance and access control mechanism, but are no longer a hard prerequisite for Copilot DLP policies
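To give a feel for what SIT-based detection does, the sketch below flags a prompt that contains a plausible credit card number by combining a pattern match with a Luhn checksum. It is a simplified illustration, not Purview's actual classifier; the function names and the regular expression are assumptions made for this example.

```python
import re

# Assumption: 13-19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum over a string of digits."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def prompt_contains_card_number(prompt: str) -> bool:
    """True if any digit run in the prompt looks like a valid card number."""
    for match in CARD_CANDIDATE.finditer(prompt):
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            return True
    return False
```

The point of the checksum is to cut false positives: a random 16-digit order number is unlikely to pass, while a real card number will. No label on the source file is needed for this kind of check to fire.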

In practice, the strongest approach is to combine both:

- SIT-based DLP for immediate risk reduction

- Sensitivity labels for long-term governance, access control and DSPM insights
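The combined approach can be pictured as two independent checks, either of which is enough to block. The following is a minimal sketch for reasoning about policy design, not a description of how Purview evaluates policies internally; `Item`, `BLOCKED_LABELS`, `BLOCKED_SITS` and `copilot_may_process` are hypothetical names, and the label and SIT names are examples only.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical policy inputs; replace with your own label and SIT names.
BLOCKED_LABELS = {"Highly Confidential"}
BLOCKED_SITS = {"Credit Card Number", "EU Passport Number"}


@dataclass
class Item:
    """A file or prompt as this policy model sees it (illustrative only)."""
    name: str
    label: Optional[str] = None                      # sensitivity label, if any
    detected_sits: set = field(default_factory=set)  # SIT names found in content


def copilot_may_process(item: Item):
    """Return (allowed, reason) for a single item."""
    if item.label in BLOCKED_LABELS:
        return False, f"label '{item.label}' is restricted"
    hits = item.detected_sits & BLOCKED_SITS
    if hits:
        return False, "sensitive info detected: " + ", ".join(sorted(hits))
    return True, "no restricted label or SIT"
```

An unlabeled document that contains a credit card number is still blocked by the SIT check, which is why SIT-based DLP gives immediate coverage while labeling is being rolled out.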

Real-world scenario: Copilot blocked by DLP

A sales manager asks Copilot to summarize an Excel file that contains customer PII protected under GDPR.

- The risk: If Copilot processes this file, sensitive information could be surfaced in the prompt response.

- How DSPM + DLP help:

- The file is labeled Highly Confidential.

- A Copilot-aware DLP policy detects the label when Copilot tries to process the file.

- The DLP policy blocks Copilot from using the content, or warns the user depending on policy configuration.

- The attempt is logged in Purview Audit, giving security teams visibility into the event.

This is exactly the type of risk DSPM for AI is designed to address.

Setting up the baseline

To build the baseline, you’ll need four things in place:

- Sensitivity labels to classify your data.

- Sensitive information types (SITs), scoped to the data you want to block from prompts.

- Copilot-aware DLP policies to enforce protection, based on labels and SITs.

- Retention settings for Copilot prompt history.

Together, these steps ensure Copilot respects your data posture — and that you can prove it through auditing and governance.

Step 1: Review & Publish Sensitivity Labels

If you don’t have labels in place, use the one-click setup to deploy the baseline labels.

Where to find the one-click setup

Option 1 (banner):

- Purview portal → Solutions → DSPM for AI → Overview

- A top banner will appear with the option to deploy labels.

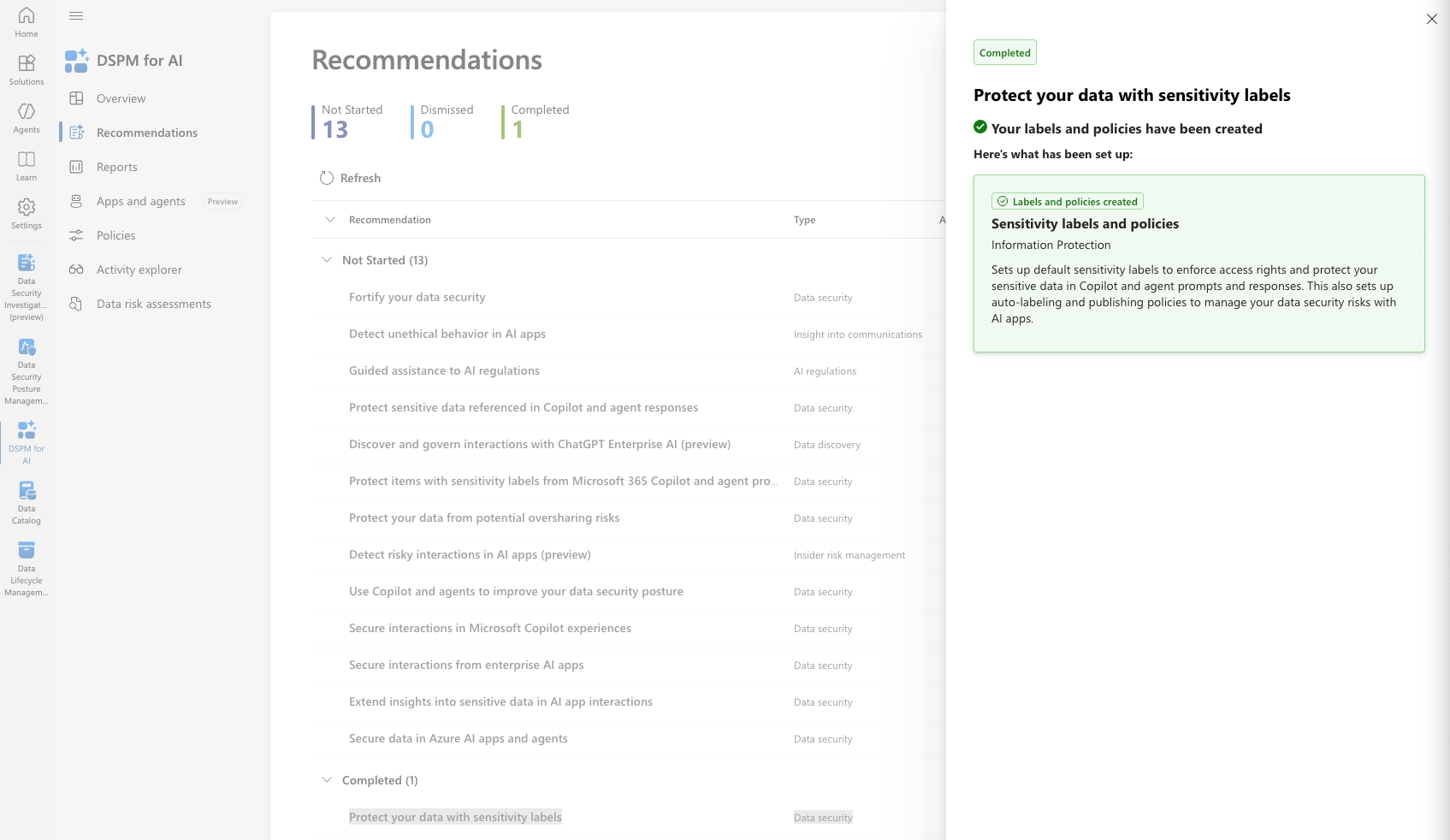

Option 2 (recommendations):

- Purview portal → Solutions → DSPM for AI → Recommendations

- Select Protect your data with sensitivity labels.

- Follow the instructions until the recommendation shows as Complete.

Once the labels are deployed, the recommendation shows as Complete. Note the following behavior:

- If sensitivity labels were deployed before enabling DSPM for AI, the recommendation will already be marked Complete and the one-click setup will not be available.

- If all sensitivity labels are deleted, the recommendation automatically recreates the baseline labels.

- To remove the baseline labels permanently, create and publish a new custom set of labels first, then delete the baseline labels.

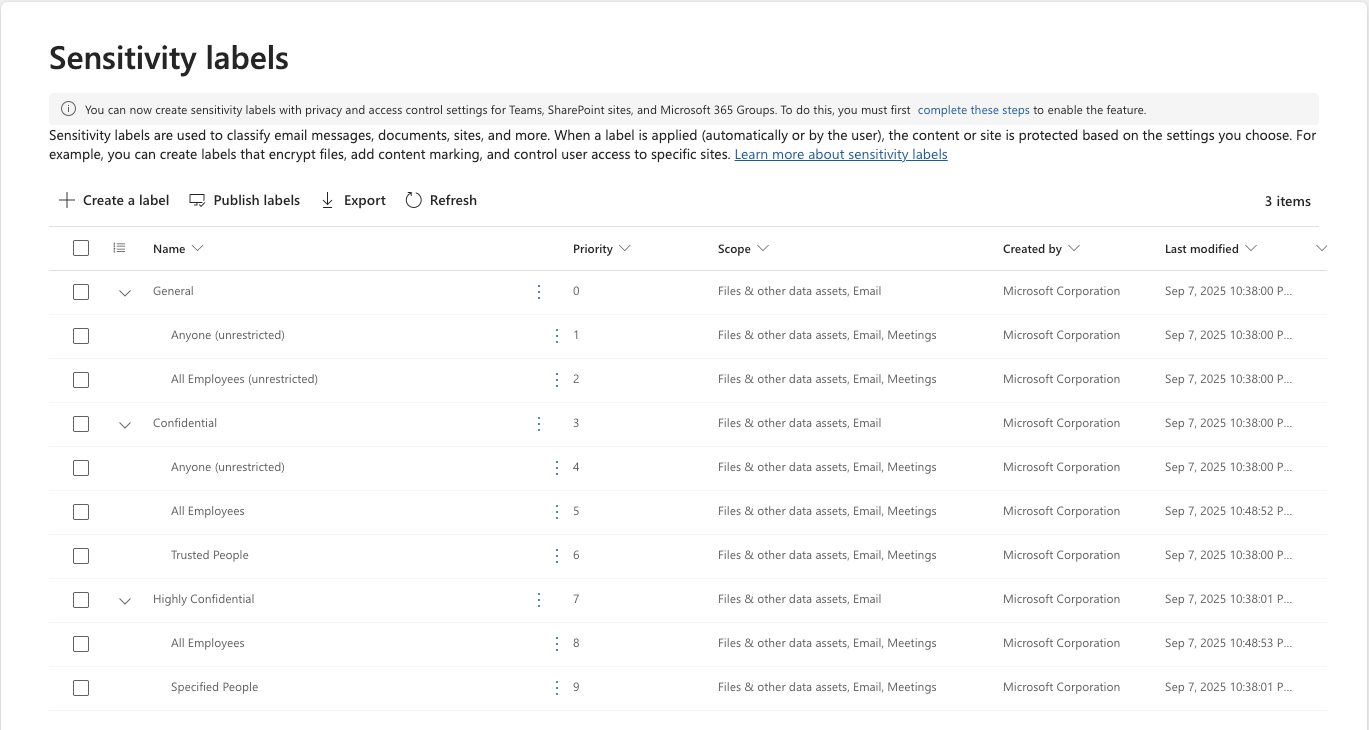

The sensitivity label baseline is as follows:

After deployment

The baseline sensitivity labels can now be used with:

- Publishing policies (make labels available to users)

- Manual labeling

- Auto-labeling (if licensed, e.g. Microsoft 365 E5)

Recommended deployment approach

- Review settings of all baseline labels.

- Map classifications to labels (e.g., GDPR/PII → Highly Confidential).

- Enable auto-labeling (if licensed).

- Define a default label for new items.

- Configure prompts for users to label unlabeled items on open.

- Establish a process for reporting issues and findings.

- Use a ring-based deployment:

- Start with pilot users across different departments.

- Include staff who use third-party systems or rely on automation.

- Collect reports, fix issues, and validate effects.

- Expand rings until full deployment is reached.
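The ring-based rollout above can be reasoned about as a simple gate: only expand to the next ring once the rings already deployed have no open issues. A minimal sketch, assuming hypothetical `Ring` and `next_ring` names and example ring names; nothing here calls Purview, it only models the process.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Ring:
    name: str
    users: List[str]
    open_issues: int = 0   # unresolved reports collected from this ring


def next_ring(rings: List[Ring], deployed_count: int) -> Optional[Ring]:
    """Return the next ring to roll out to, or None if blocked or finished."""
    if deployed_count >= len(rings):
        return None  # full deployment reached
    if any(r.open_issues > 0 for r in rings[:deployed_count]):
        return None  # fix reported issues before expanding
    return rings[deployed_count]


rings = [
    Ring("pilot", ["sales-pilot", "finance-pilot"]),
    Ring("automation-heavy", ["integration-team"]),
    Ring("everyone", ["all-users"]),
]
```

With the pilot ring deployed and two issues still open, `next_ring(rings, 1)` returns `None`; once the issues are closed it returns the second ring.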

Step 2: Configure AI-specific DLP Policies

Protecting sensitive data in Microsoft 365 Copilot requires Data Loss Prevention (DLP) policies. These policies prevent sensitive content from being surfaced in prompts or responses.

Two ways to create the DLP policy

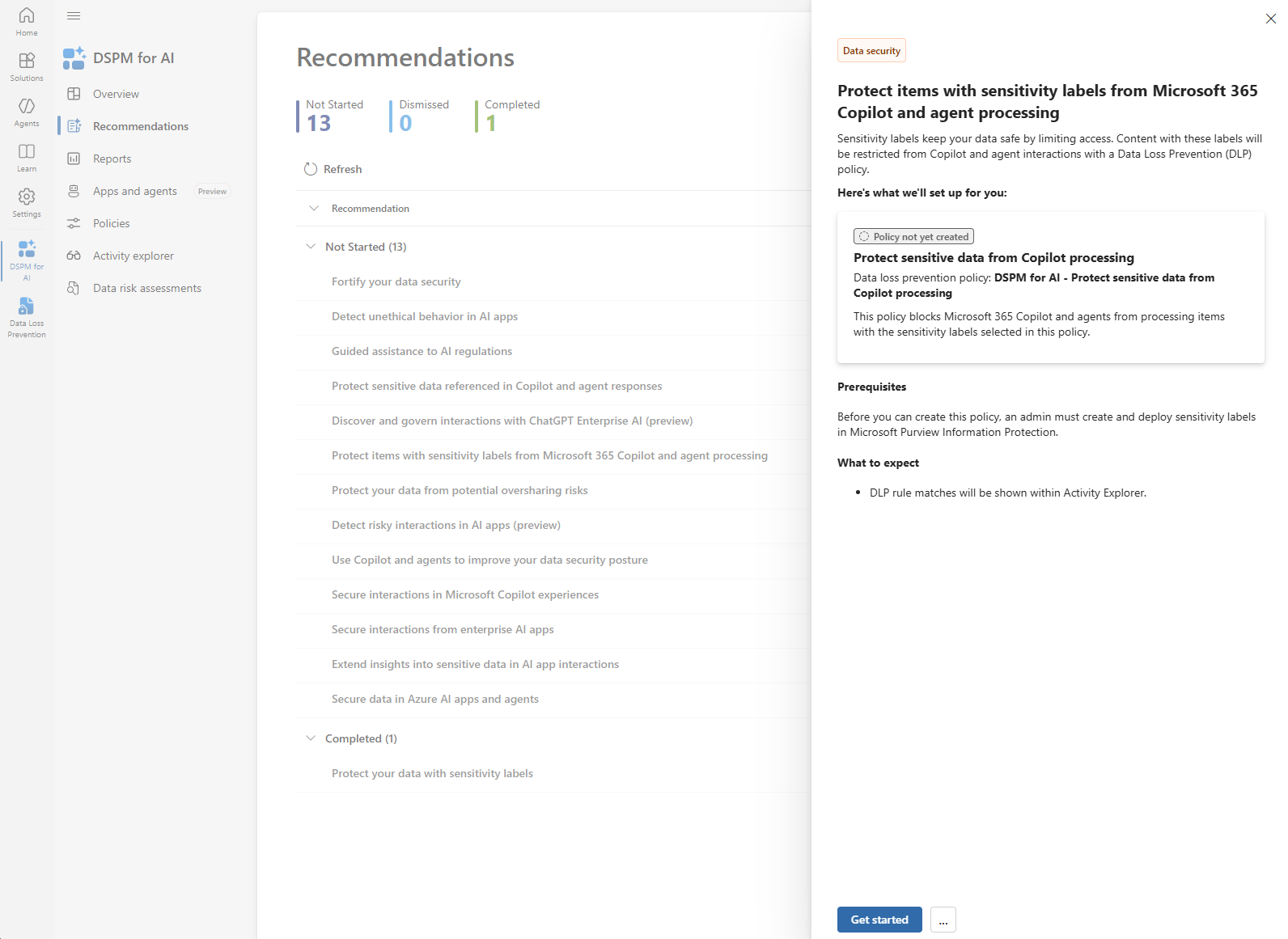

Option 1 - From DSPM for AI (Quick Wizard)

- Go to Purview portal → Solutions → DSPM for AI → Recommendations.

- Select Protect items with sensitivity labels from Microsoft 365 Copilot and agent processing.

- Complete the wizard to deploy the policy.

Important: A policy created through this wizard can take up to 24 hours to become visible and active.

Option 2 - From Purview DLP (Recommended for more control)

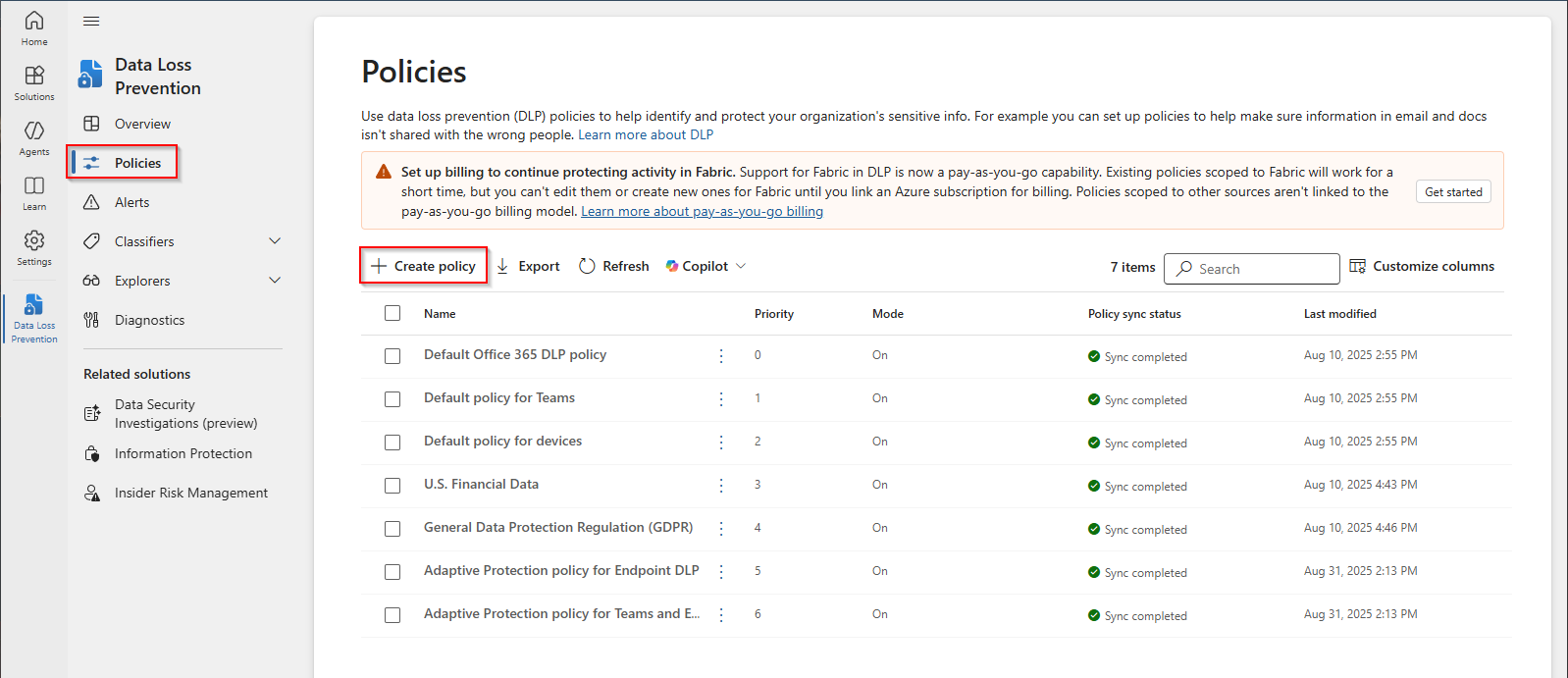

- Go to Purview portal → Solutions → Data Loss Prevention → Policies.

- Create a new DLP policy.

- Choose Microsoft 365 Copilot as the location.

- Use sensitivity labels as the condition (e.g., Confidential, Highly Confidential).

- Apply restrictions to Copilot prompts and responses.

- Save and deploy with the policy mode set to turn the policy on immediately.

Retention policies (for Copilot)

- Copilot includes its own prompt history retention settings.

- These retention policies control how long user prompts and Copilot responses are kept for compliance and auditing.

- They do not evaluate sensitivity labels or general content lifecycle.

- Configure these settings to align with your organization’s requirements for audit trails and privacy.

DLP Option 1 – From DSPM for AI (quick wizard)

- Go to the Microsoft Purview portal

- Navigate to Solutions → DSPM for AI → Recommendations

- Select the recommendation Protect items with sensitivity labels from Microsoft 365 Copilot and agent processing

- Select Get started

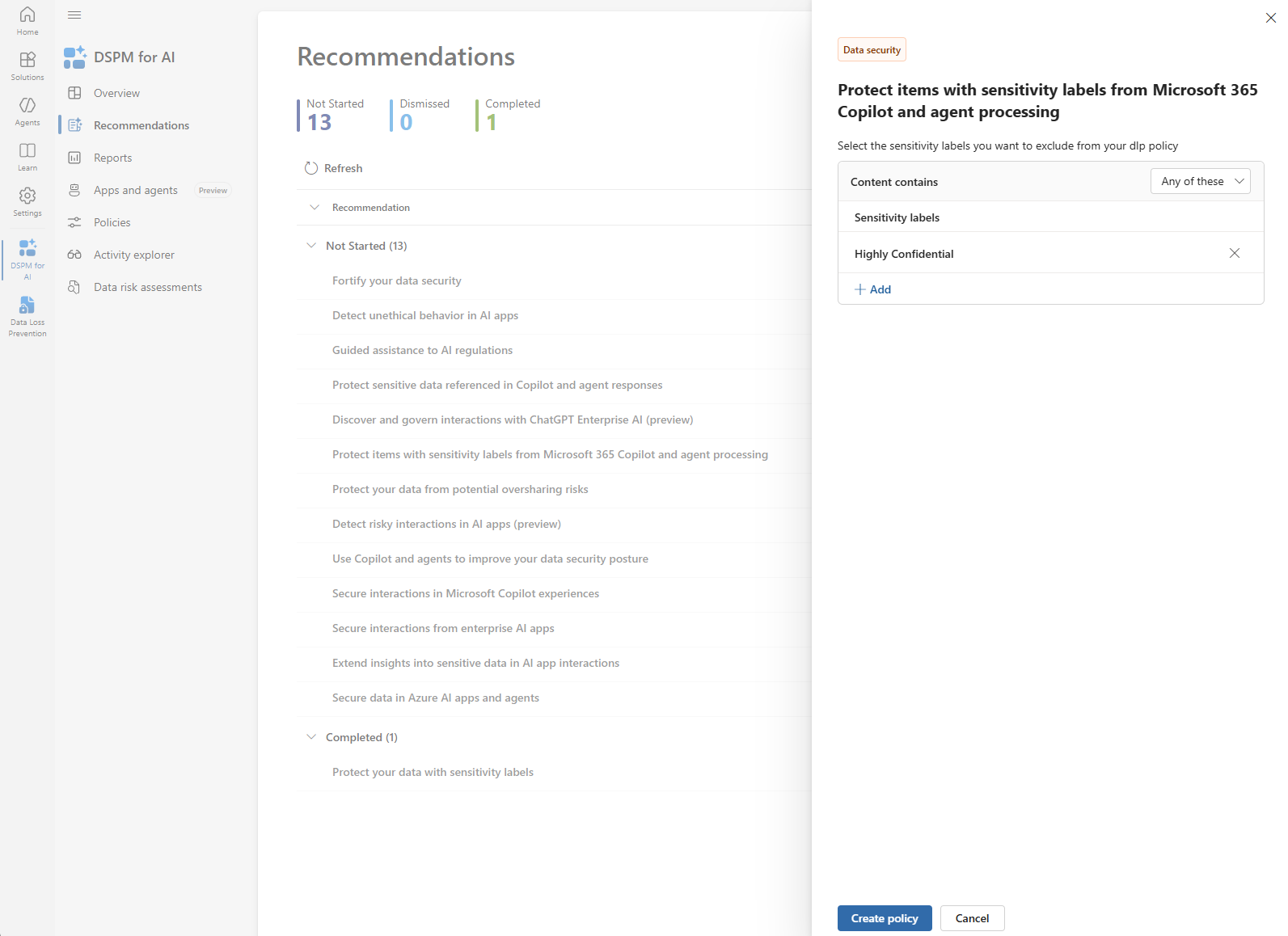

- Select Sensitivity Label

- Highly Confidential used in this example

- This relies on the sensitivity labels published in Step 1.

- Select Create Policy

DLP Option 2 – From Purview DLP (recommended for more control)

- Go to the Microsoft Purview portal

- Navigate to Solutions → Data Loss Prevention → Policies

- Select Create Policy

- Data to Protect

- Select Data stored in connected sources

- Skip Template or custom policy

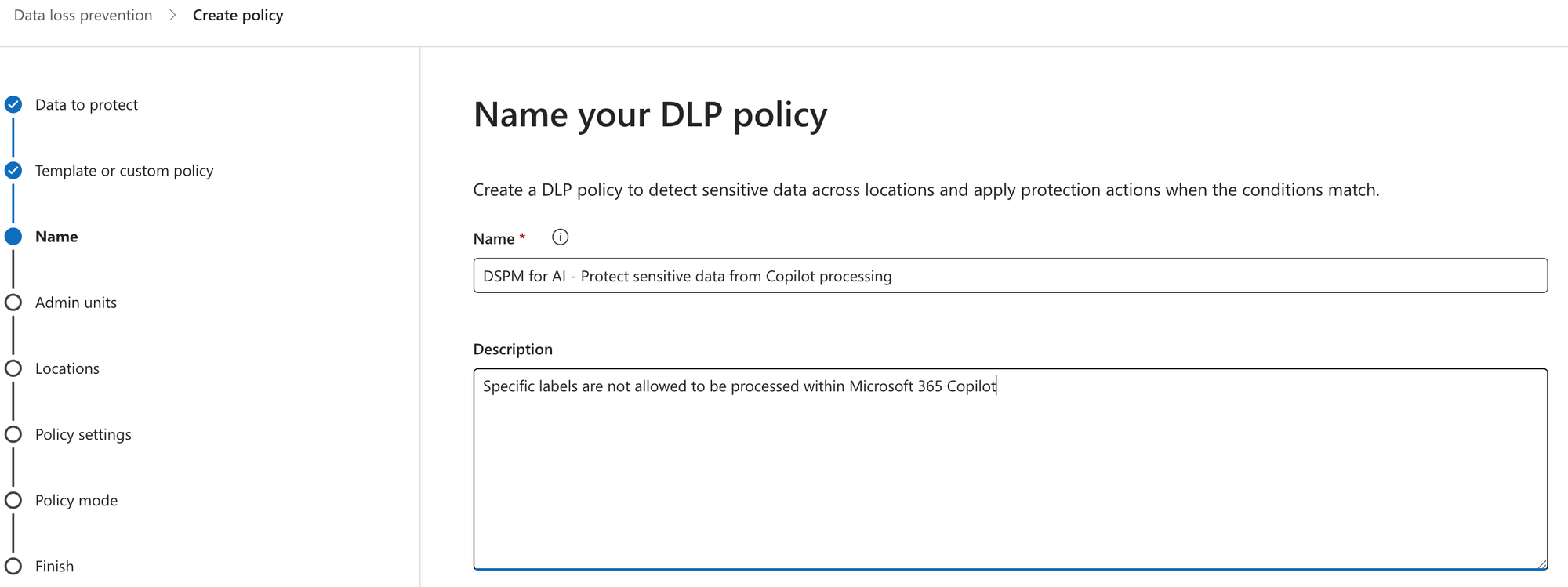

- Name the policy

- Skip admin units

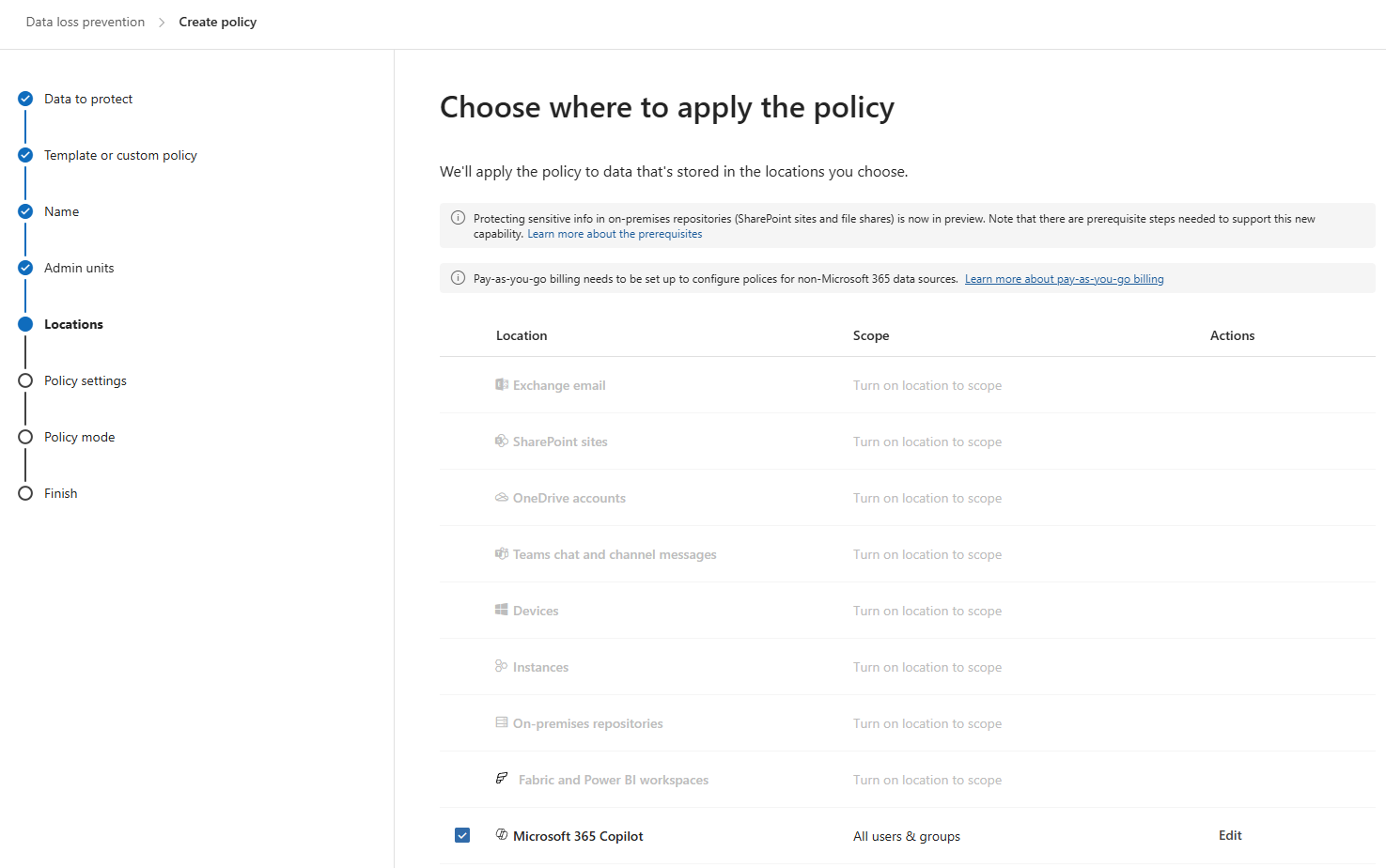

- Location

- Select Microsoft 365 Copilot

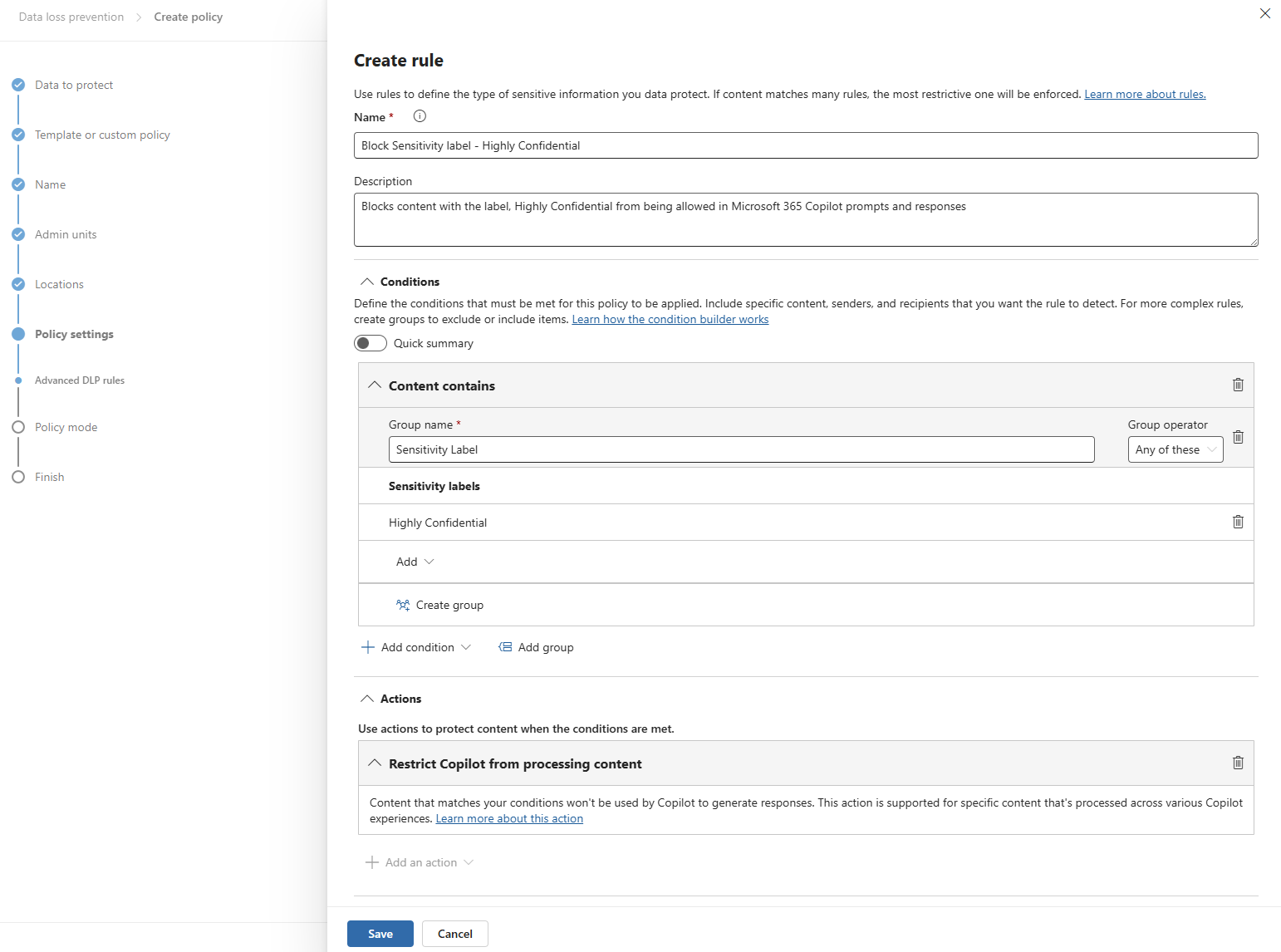

- Policy settings

- Create or customize advanced DLP rules

- Custom Advanced DLP rule

- Create rule

- Add condition

- Select Sensitivity label to be used in policy.

- Highly Confidential used in this example

- This relies on the sensitivity labels published in Step 1.

- Add Action

- Restrict Copilot from processing content

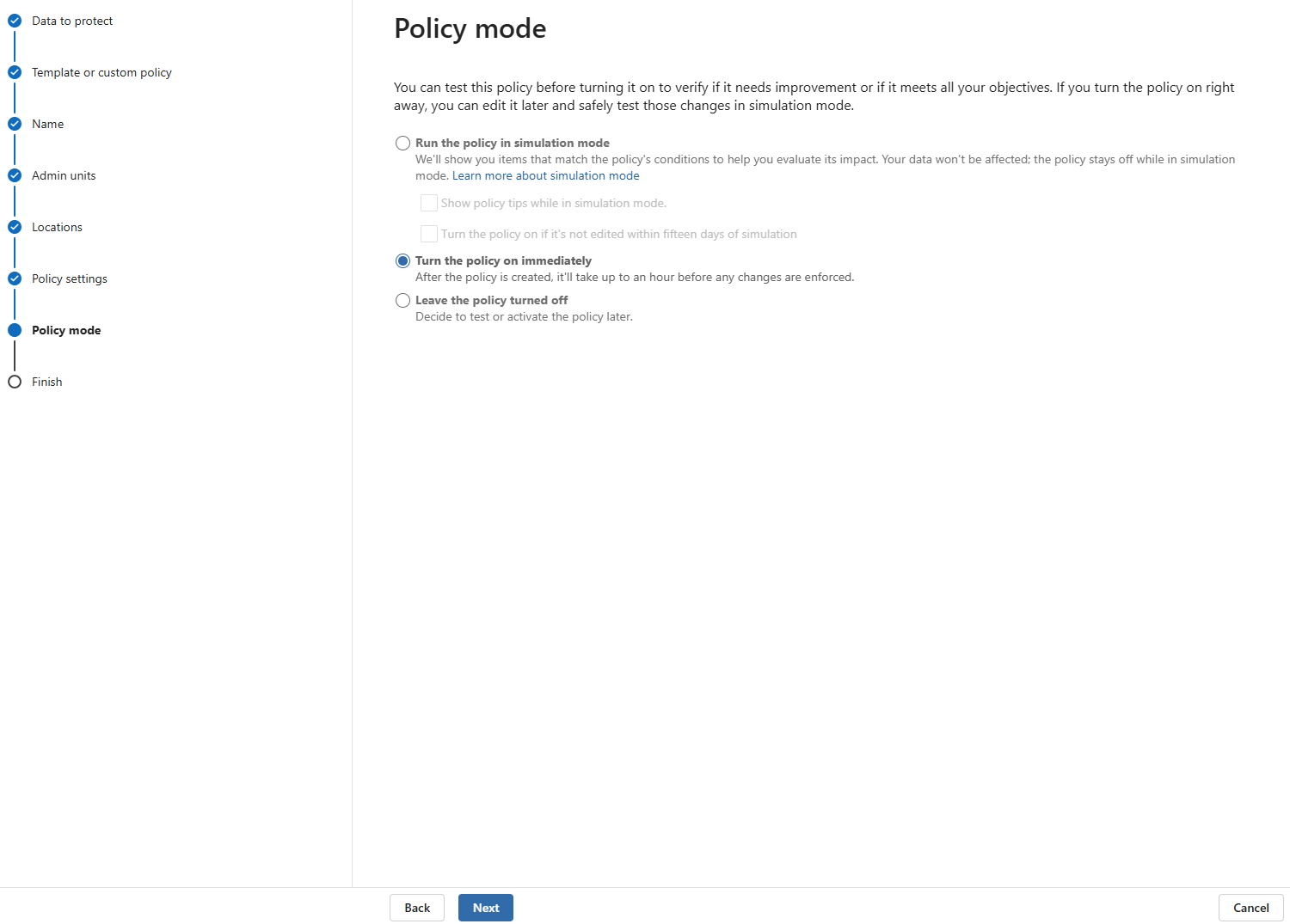

- Policy Mode

- Turn the policy on immediately

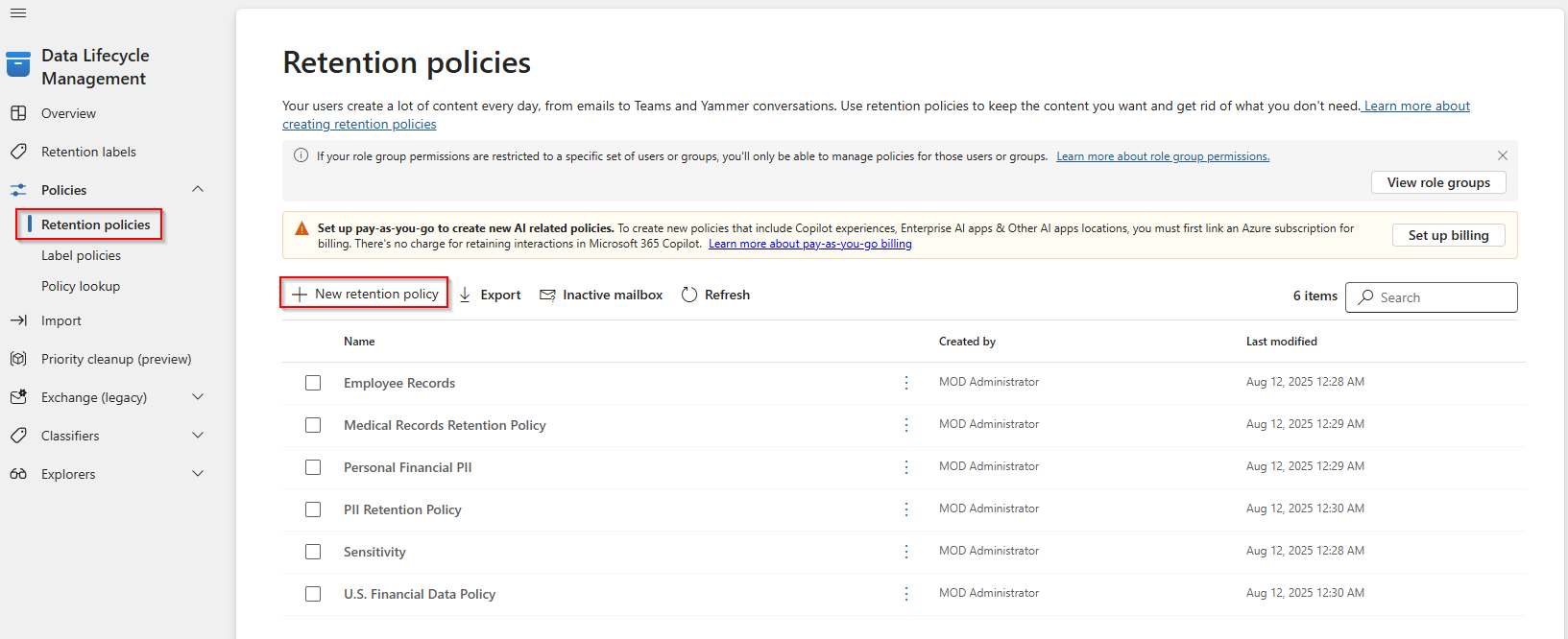

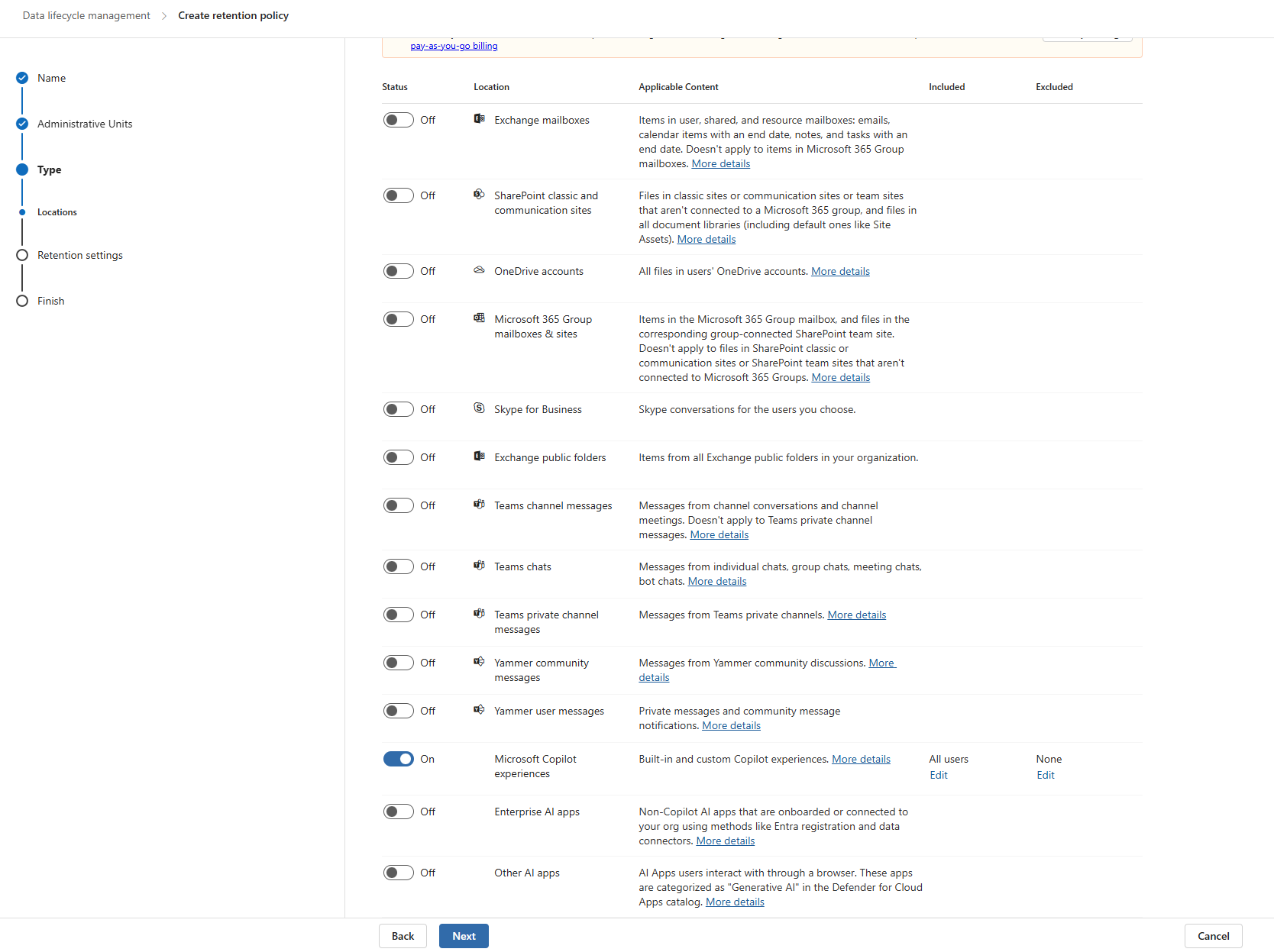
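Because the rule depends on labels published in Step 1, it can help to sanity-check the planned rule before clicking through the wizard. The sketch below is a hypothetical review helper, not a Purview API: the dictionary layout, `review_rule`, and the label names in `PUBLISHED_LABELS` are assumptions for illustration.

```python
# Example label names; use the labels you actually published in Step 1.
PUBLISHED_LABELS = {"General", "Confidential", "Highly Confidential"}

# Hypothetical description of the rule you intend to configure in the portal.
planned_rule = {
    "location": "Microsoft 365 Copilot",
    "condition_labels": ["Highly Confidential"],
    "action": "Restrict Copilot from processing content",
}


def review_rule(rule: dict, published: set) -> list:
    """Return a list of problems found in the planned rule (empty if none)."""
    problems = []
    if rule.get("location") != "Microsoft 365 Copilot":
        problems.append("location is not Microsoft 365 Copilot")
    labels = rule.get("condition_labels", [])
    if not labels:
        problems.append("no sensitivity label condition set")
    for label in labels:
        if label not in published:
            problems.append(f"label not published: {label}")
    if not rule.get("action"):
        problems.append("no action set")
    return problems


print(review_rule(planned_rule, PUBLISHED_LABELS))  # prints []
```

An empty list means the plan is internally consistent; it says nothing about whether the policy has finished deploying in the tenant.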

Step 3: Retention for Microsoft 365 Copilot Prompt History

This retention policy controls how long user prompts and Copilot responses are kept. It does not evaluate sensitivity labels or general content lifecycle.

- Go to the Microsoft Purview portal

- Navigate to Solutions → Data Lifecycle Management → Policies → Retention Policies

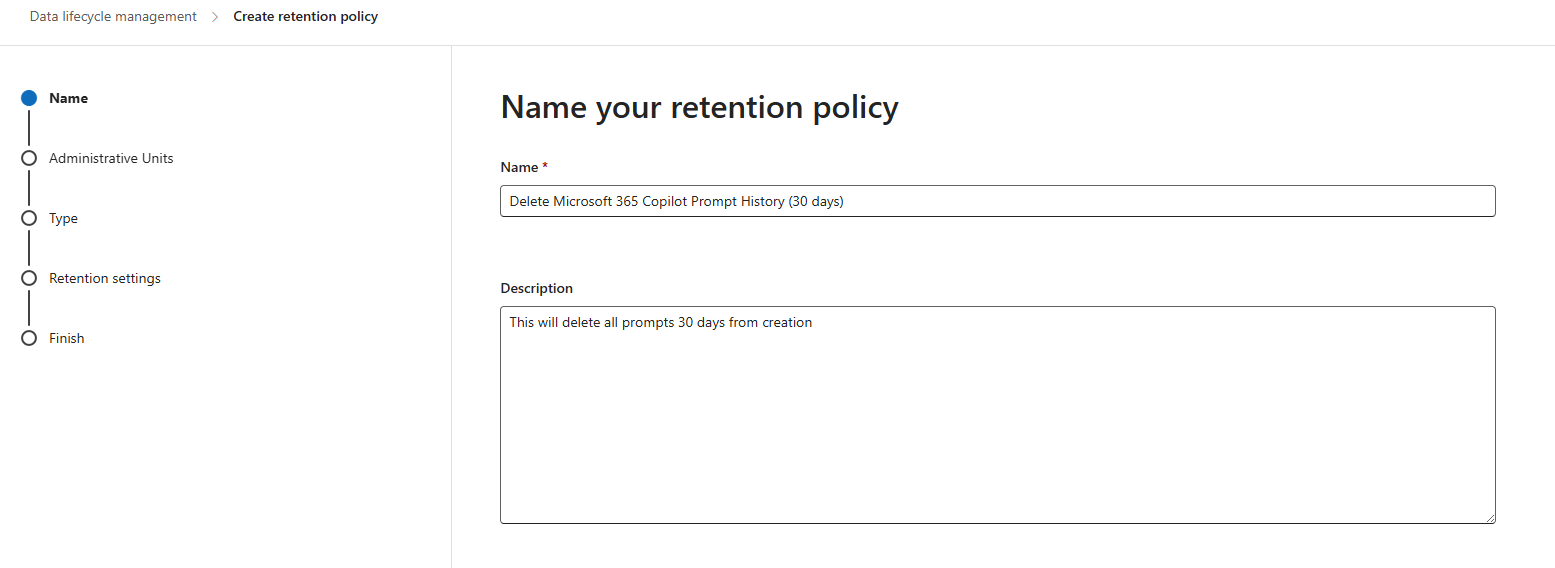

- Select New Retention Policy

- Name the policy

- Skip administrative units

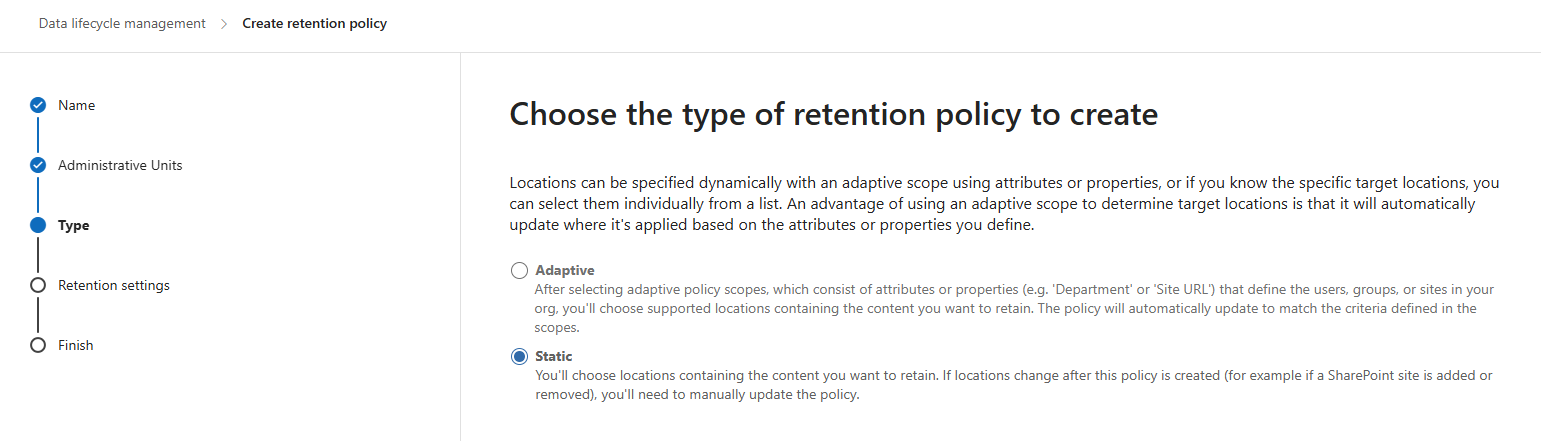

- Type

- Select Static

- Location

- Select Microsoft Copilot Experience

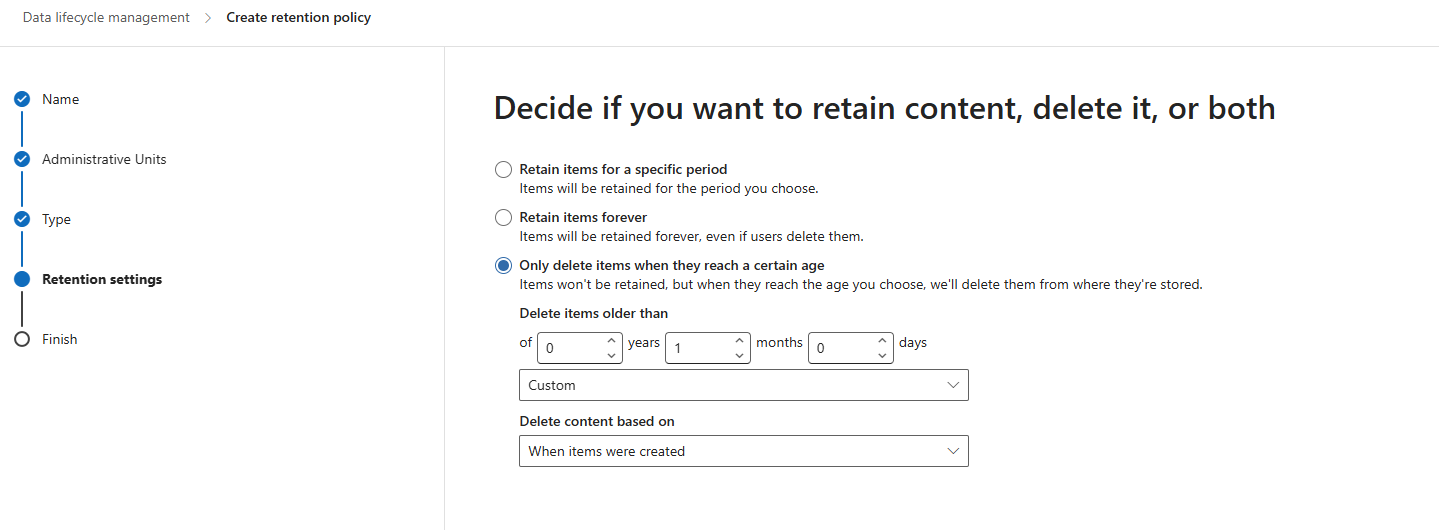

- Retention Settings

- Only delete items when they reach a certain age

- In this example, it's set to 1 month from when the prompt is created

- Tailor this to your specific business requirements
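To make the "delete when items reach a certain age" setting concrete, here is a small sketch of the age check it implies. This is an illustration of the rule only, under the assumption that "1 month" is approximated as 30 days; the function name is hypothetical and the service's actual deletion schedule may differ.

```python
from datetime import datetime, timedelta, timezone

# Assumption: "1 month" is approximated as 30 days for this illustration.
RETENTION_PERIOD = timedelta(days=30)


def is_past_retention(created_at: datetime, now: datetime) -> bool:
    """True once an interaction is at least RETENTION_PERIOD old."""
    return now - created_at >= RETENTION_PERIOD


now = datetime(2025, 3, 1, tzinfo=timezone.utc)
prompt_created = datetime(2025, 1, 15, tzinfo=timezone.utc)
print(is_past_retention(prompt_created, now))  # True: the prompt is 45 days old
```

A shorter period reduces how much prompt history is exposed if an account is compromised, while a longer one preserves more of the audit trail, so the value should follow your compliance requirements.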

Wrap-up

With sensitivity labels published, Copilot-aware DLP policies deployed, and retention configured for prompt history, you now have the baseline needed to secure Microsoft 365 Copilot.

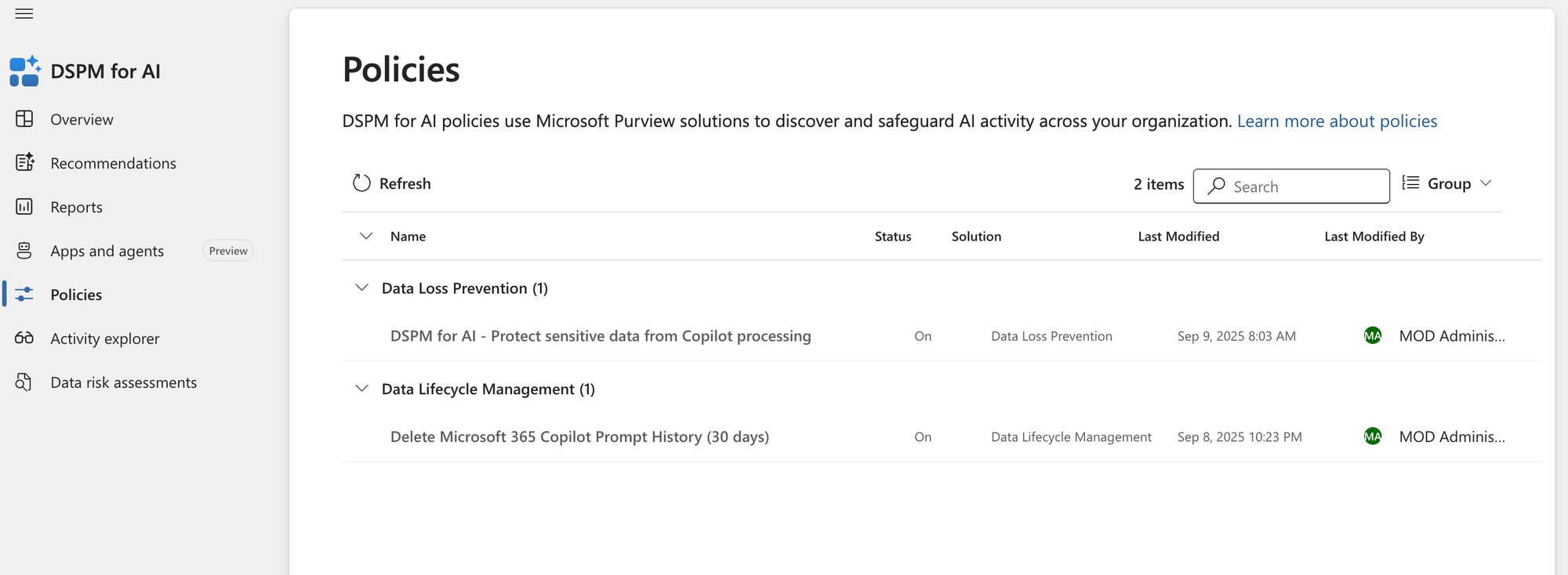

Within DSPM for AI, the Policy overview gives you a single place to confirm that these protections are active. The example shows the two policies we created:

- A DLP policy that prevents Copilot from processing sensitive data

- A Retention policy that manages Copilot prompt history

This confirmation step closes the loop: your baseline policies aren’t just configured, they’re integrated into DSPM for AI where you can monitor them.

But deployment is only the beginning. The next challenge is understanding where sensitive data exists, how it’s shared, and what risks Copilot could expose if left unchecked.

With these policies in place and visible in DSPM for AI, you’ve established a baseline that makes Copilot safe to adopt at scale.

Copilot can now add value without exposing your most sensitive data — and you have the audit trail to prove it.